How to stop LinkedIn from using your data to train AI!

If you haven’t manually opted out of LinkedIn’s AI training, it could be using your resume and profile information to train Microsoft and Co.’s artificial intelligence. And no, you didn’t opt in. Take action and stop this now.

How to stop LinkedIn from using your data to train AI

If you missed the opportunity to opt out by November 3rd, 2025, you can still do so by following the steps below. Note that this stops future data from being used, but it does not remove the data that’s already being used to train AI models.

- Log in to your LinkedIn account

- Click on your profile picture

- Click Settings & Privacy

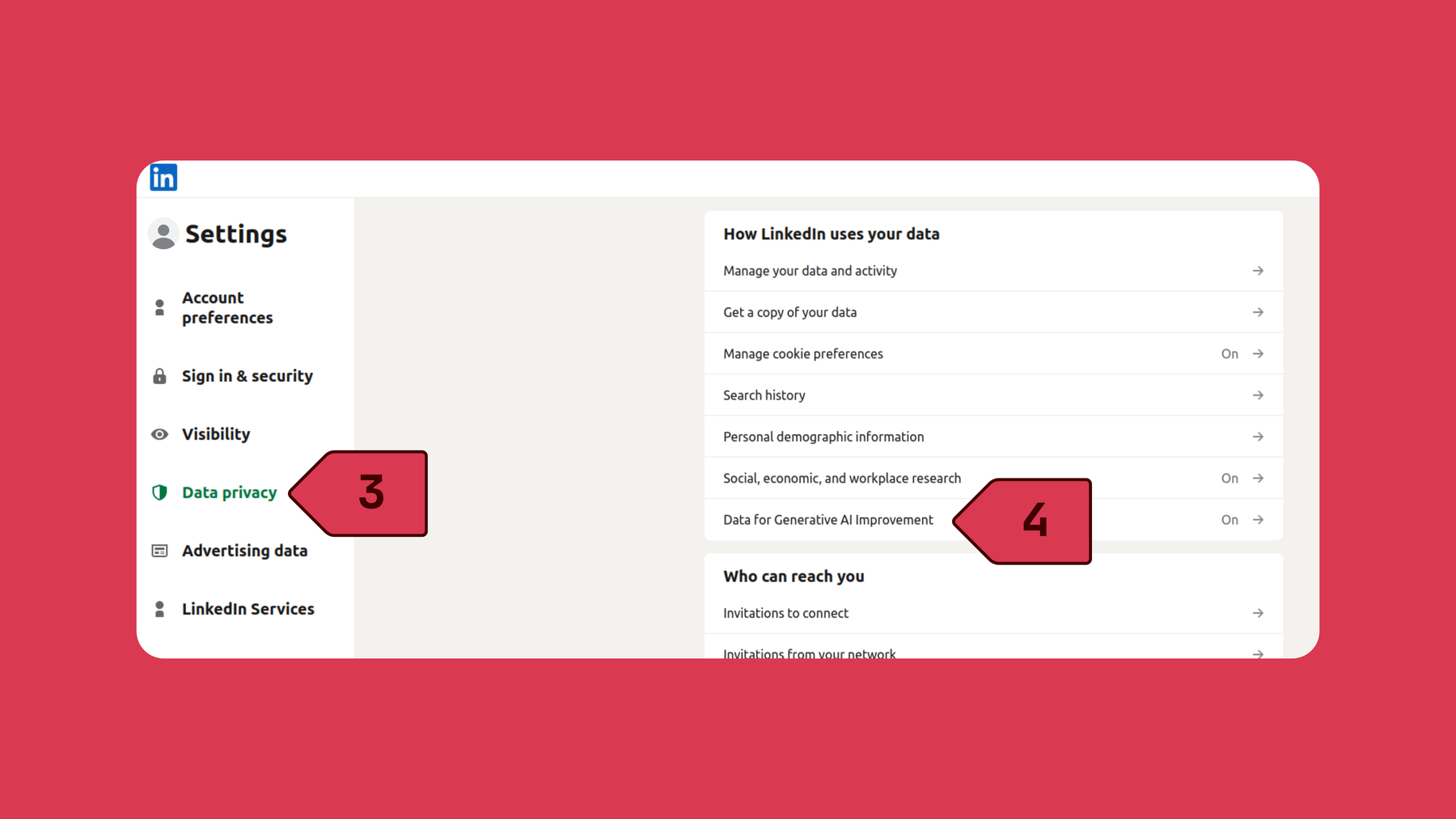

- Select Data privacy

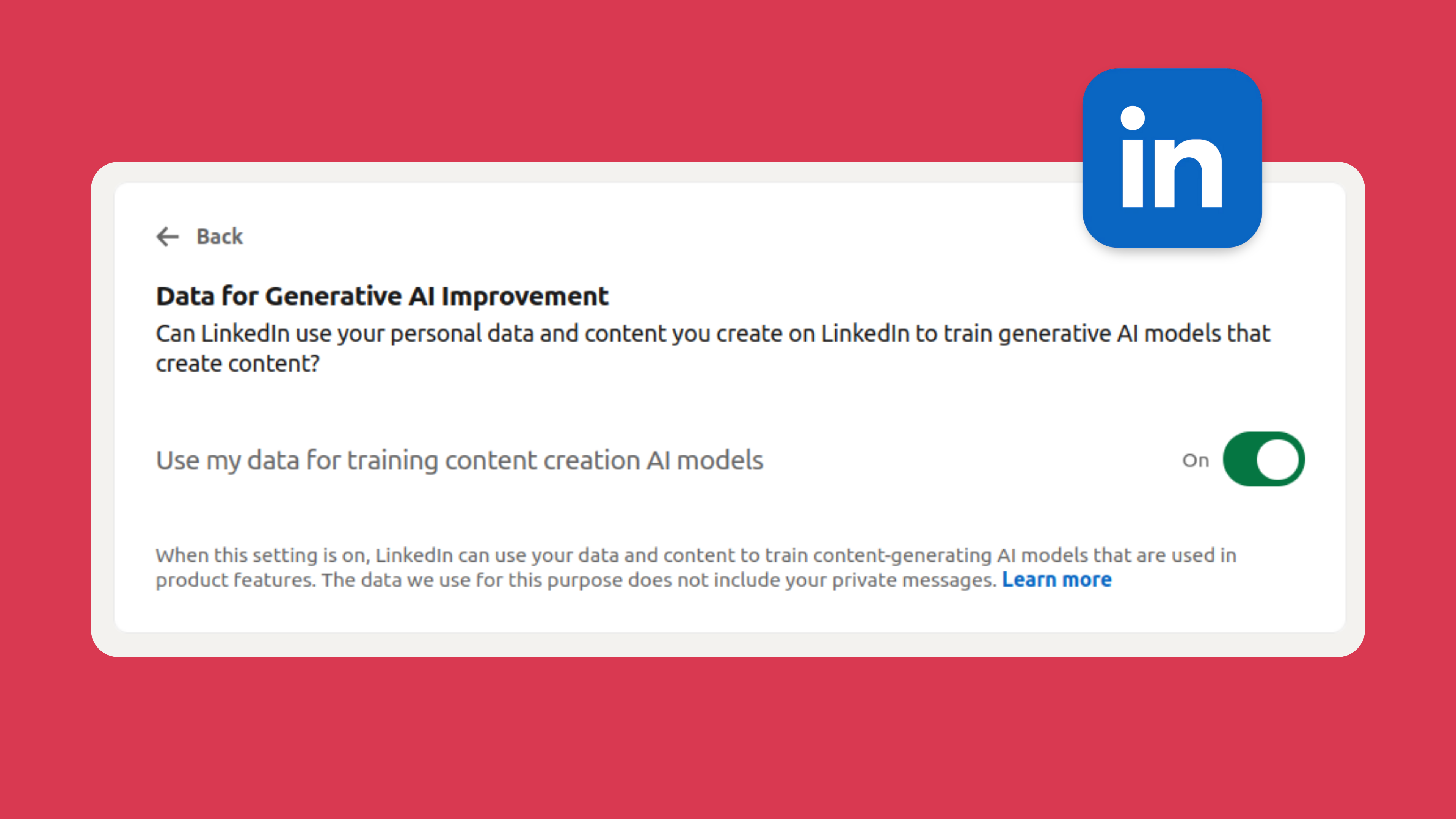

- Click Data for Generative AI Improvement

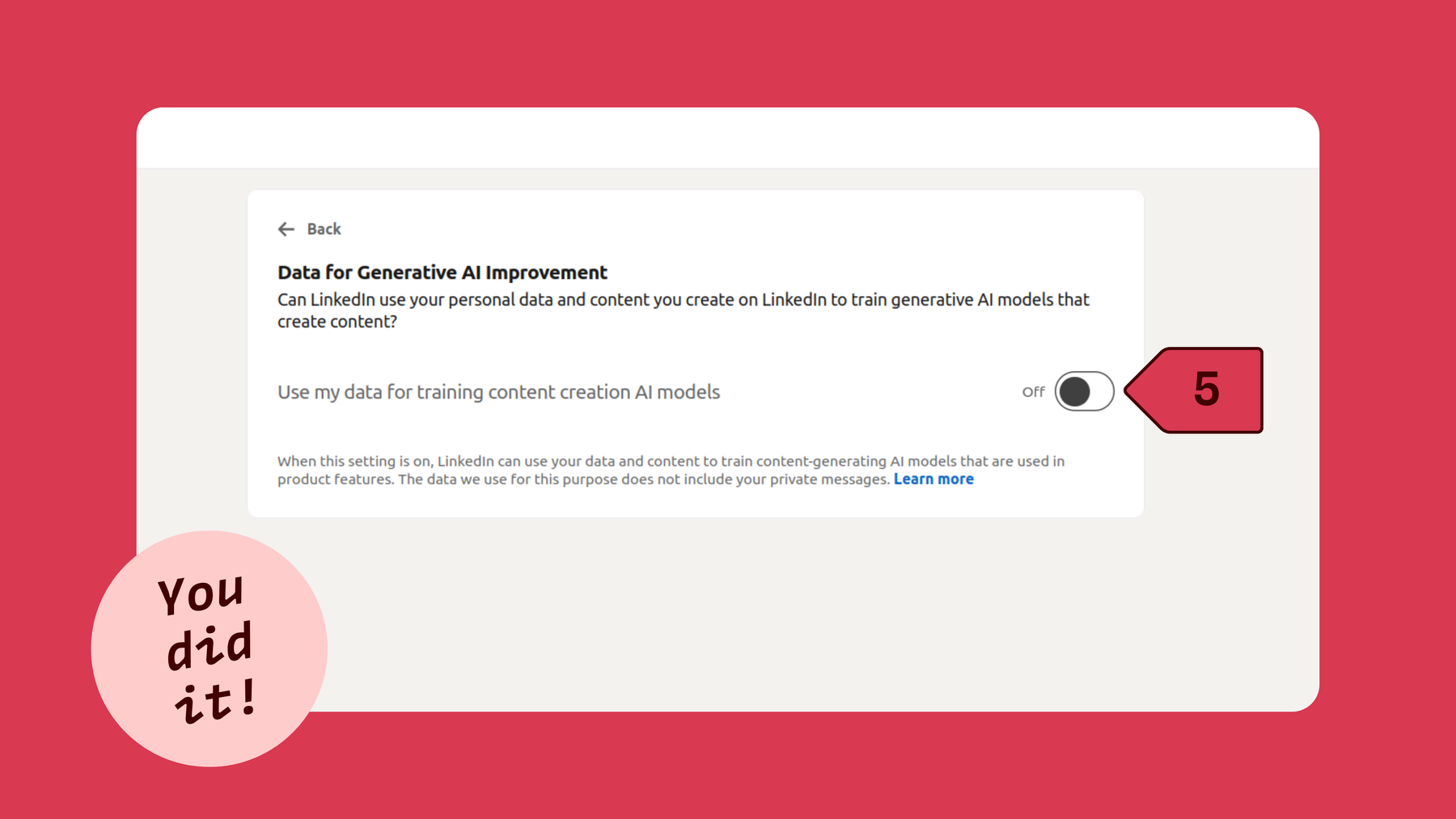

- Next to “Use my data for training content creation AI models”, click the toggle to turn it off

Quick link to opt out: Click this link to stop LinkedIn from using your data for AI.

LinkedIn is the latest big tech company to quietly start exploiting European user data to train AI models. Last year, on September 18, LinkedIn announced that starting November 3rd, 2025, data from its users in the EU, EEA, and Switzerland, dating as far back as 2003, would be used to train its own and its affiliates’ AI models. This meant that information like your job history, resume, and LinkedIn engagement could be collected and used to train AI models. Rather than giving users the chance to explicitly opt in or opt out of this data processing, LinkedIn chose to opt everyone in by default (like it did in 2024 in other regions), claiming legitimate interest under the EU’s GDPR as the basis for the data processing.

LinkedIn is owned by Microsoft, which is being sued in Australia for misleading millions of customers into paying a 45% price increase for AI add-ons, and which also came under fire for taking screenshots of users’ screens every few seconds via Microsoft Recall. Against this background, the announcement made many people worried about how their data is being used, and about how LinkedIn simply decided to use the data instead of asking politely. So now, users must take action or stand on the sidelines while their data is abused.

Big win for LinkedIn: green light to use data for AI training in the EU, EEA, & Switzerland

In November 2024, LinkedIn started using the personal data of users around the world to train its generative AI models. At the time, Europeans felt safe, relying on the GDPR, which seemed to protect their data from being abused, because back then the data of users residing in the EU, EEA, and Switzerland was excluded. But as announced, since the end of 2025, LinkedIn has been using the data of users in these areas for AI training as well. This is a win for LinkedIn, but a big loss for its users’ privacy.

Because of the alarming announcement, privacy experts in the EU have warned that users must manually go to their LinkedIn account settings and toggle off the option “Data for generative AI improvement”.

What data does LinkedIn collect for AI training?

If you did not manually opt out of having your data used for AI training before November 3rd, 2025, all of the data listed below, dating back to 2003, can be used for training LinkedIn’s and its affiliates’ AI models. If you haven’t opted out yet, you still can, but the data collected before you opt out will not be removed from LinkedIn’s AI models, meaning your personal data will remain permanently in the training datasets.

- All your profile data: names, profile picture, current and past jobs, education, skills, location, endorsements, publications, patents, and recommendations.

- Your content: posts you’ve written or shared, articles, comments, contributions, and poll responses.

- Your job data: your resumes, jobs you’ve applied to, application data, and replies to job screening questions.

- Your group data: activity in groups and messages.

- Your feedback: the feedback you provide, including responses and ratings.

Note: LinkedIn has stated that your private messages, login details, payment methods, and highly sensitive data will be excluded from the data collection.

How to opt out of LinkedIn’s use of your data to power their AI projects on any device

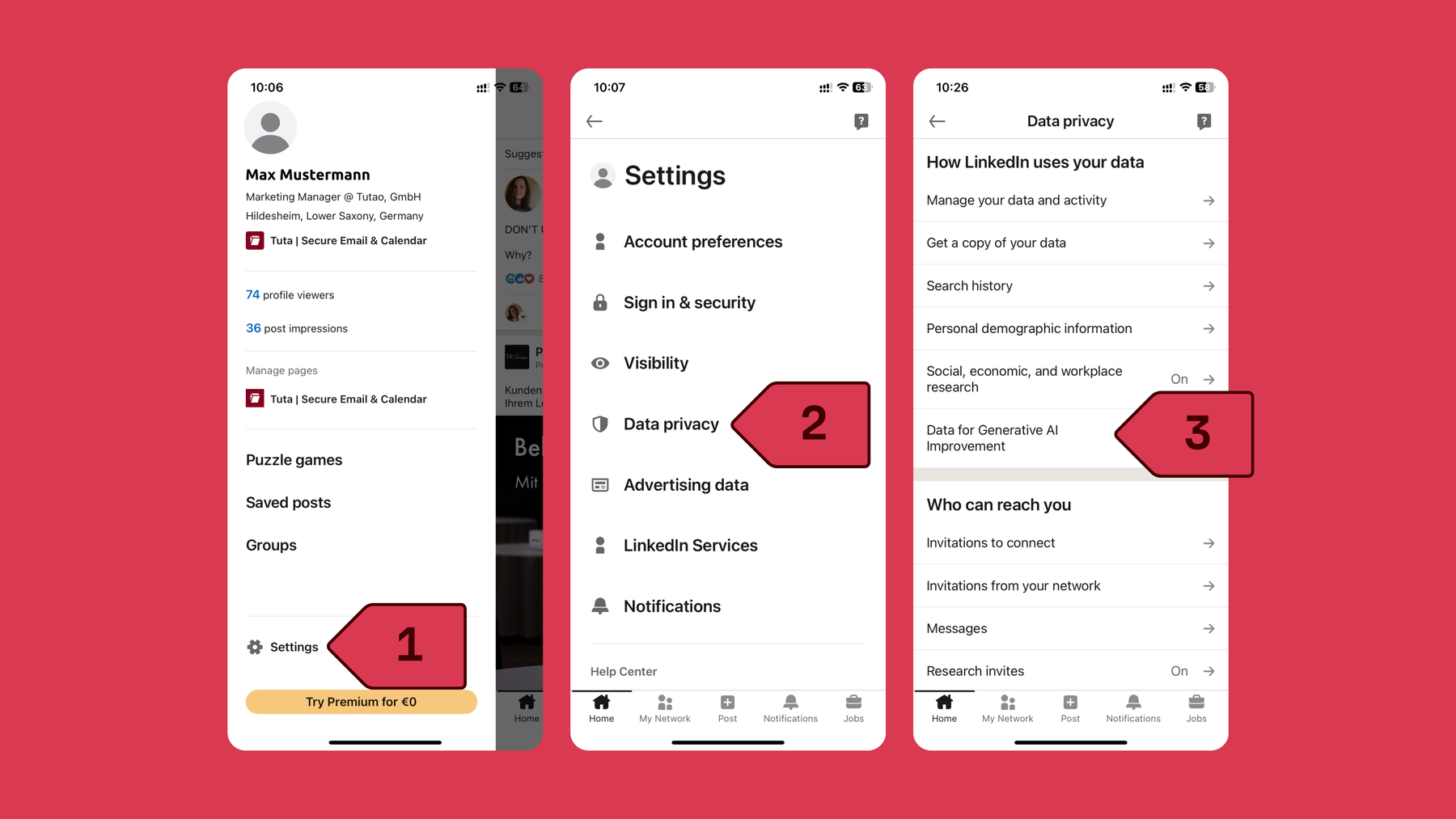

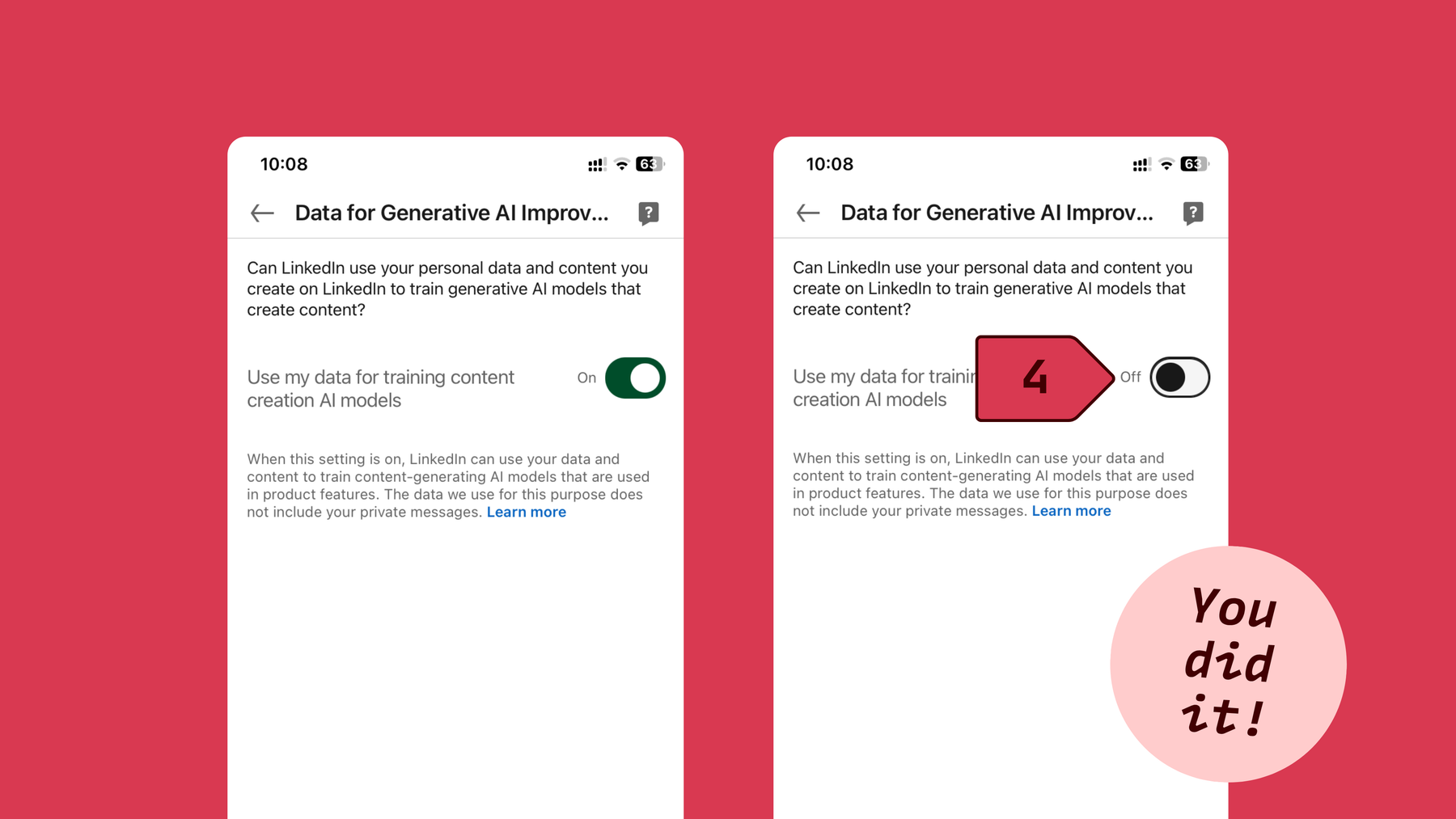

How to opt out of LinkedIn AI on iPhone

Follow these steps to opt out of LinkedIn using your data to train AI on the iPhone app. Screenshot: LinkedIn.

- Open the LinkedIn app on your phone and log in to your account

- Click on your profile picture

- Click to open Settings

- Select Data privacy

- Click Data for Generative AI Improvement

- Next to “Use my data for training content creation AI models”, click the toggle to turn it off

Opt out of LinkedIn’s AI on Android

- Open the LinkedIn app on your Android and log in to your account

- Click on your profile picture

- Click to open Settings

- Select Data and Privacy

- Click Data for generative AI improvement

- Next to “Use my data for training content creation AI models”, click the toggle to turn it off

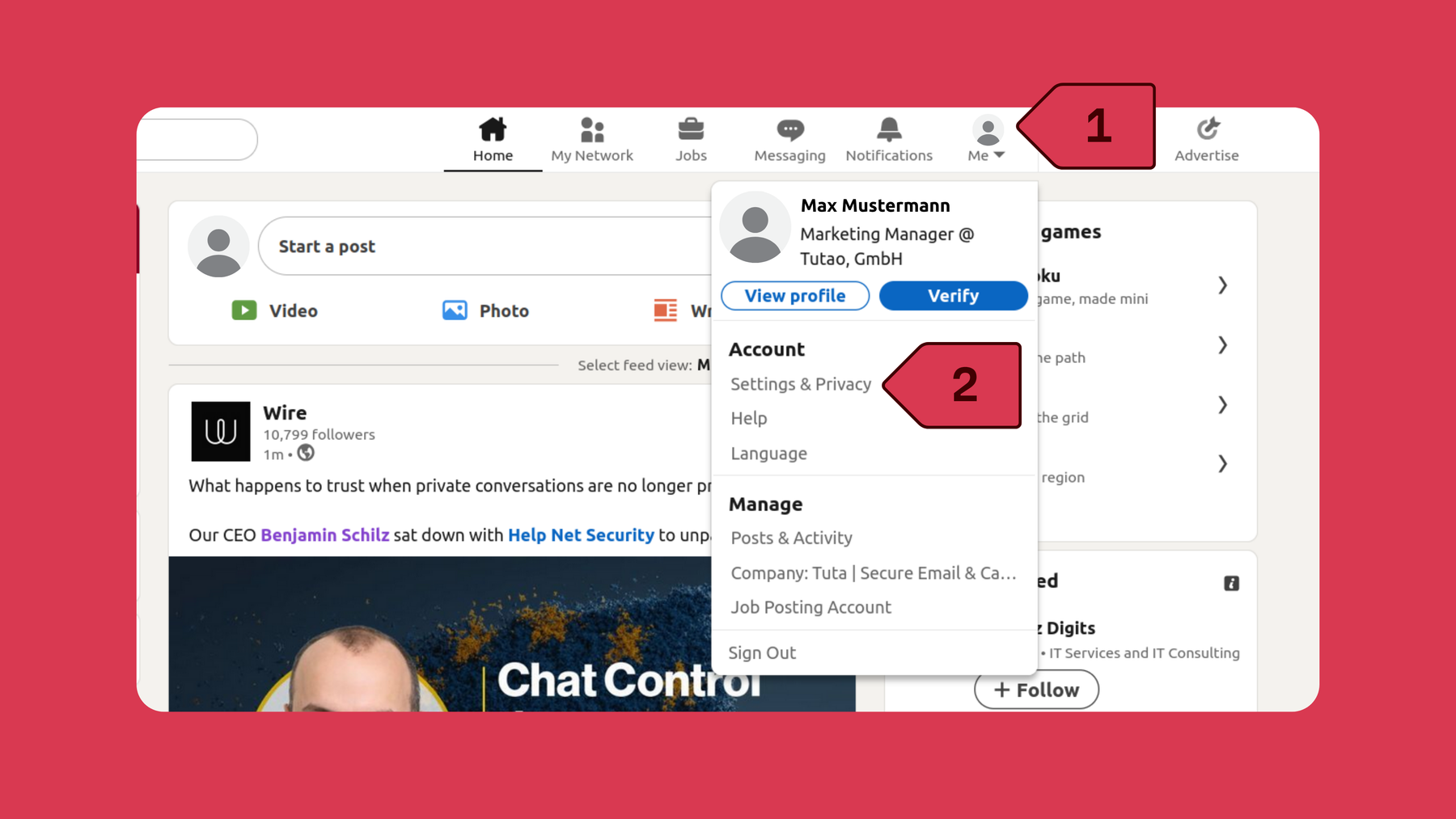

Stop LinkedIn from using your data for AI training from a browser

Follow these steps to opt out of LinkedIn using your data for training content creation AI models from a web browser. Screenshot: LinkedIn.

- Log in to your LinkedIn account

- Click on your profile picture

- Click Settings & Privacy

- Select Data privacy

- Click Data for Generative AI Improvement

- Next to “Use my data for training content creation AI models”, click the toggle to turn it off

In addition to opting out of future data being used for AI purposes, you can also fill out this LinkedIn Data Processing Objection Form to object to the processing of your personal data or to request that it be restricted.

Past shock at LinkedIn’s sneaky introduction of AI opt-in

In 2024, LinkedIn faced backlash when its users learned that they had been opted in by default to having their data used for training AI models. More concerning, the opt-out toggle was not a new setting, which came as a shock to LinkedIn users who were not aware that their data was already being used to train AI.

On September 18th, 2024, 404 Media reported that LinkedIn didn’t even update its privacy policy before it started scraping user data. When 404 Media reached out to LinkedIn about this, the company told 404 that it would update its terms of service “shortly”. This response was questionable at best. Then, on the same day, LinkedIn quietly released a blog post written by its general counsel, Blake Lawit, announcing changes to its privacy policy and terms of service that would come into effect on November 20, 2024. The changes made to LinkedIn’s User Agreement and Privacy Policy explained how user data would be used for AI by the company.

One would have expected the Microsoft-owned platform to announce the change in its privacy policy before scraping user data, but in this case, it seems to have happened the other way around.

Privacy vs AI

LinkedIn now being able to collect European users’ data for AI training may come as news, but unfortunately, this is a common practice, and your data is probably being used for AI training by other sites, too. Even in the EU, which protects its citizens’ data (to an extent) with the GDPR, tech giants still find loopholes to collect user data to develop their AI models.

For example, Meta’s initial plan to use EU users’ Facebook and Instagram data to train Meta AI was put on hold, but since May 27th, 2025, Meta has been using European users’ public Facebook and Instagram data for AI training. Unfortunately for Facebook users in other parts of the world, like the US, where there are no data privacy laws comparable to the EU’s GDPR, Meta-owned Facebook has a much greater reach over user data and can basically use it how it wants. There, users’ data is being used for AI training, and people have no way to opt out. This not only raises massive data privacy concerns but also highlights users’ lack of choice and freedom when it comes to their data and how it’s used.

Meta is not the only tech behemoth to mistreat user data, of course. Google and AI email writers do this as well. A Gmail user shared a conversation they had with Google’s Gemini AI chatbot. What was shocking about the conversation was that the data the chatbot used came from the Gmail user’s emails, even though the user had never given access or opted in to allow the Large Language Model access to their personal mailbox.

What we learn from the two examples above is that these Big Tech providers only think about increasing their own benefit, instead of acting ethically. When it comes to big tech, profit, and technical advancements like this crazy rush to board the AI train (often without your consent), all boundaries are being ignored.

Final thoughts

LinkedIn’s changes to how it handles user information may come as a surprise to some people using the platform, but sadly this is nothing out of the ordinary when it comes to how many tech companies handle data. LinkedIn is yet another tech company that joined the AI trend and uses user information to train its models.

If you visit Tuta’s blog regularly, you will know that big tech companies operate on the business model of profiting off your personal information. Their carefree approach to privacy follows a tried and true method: collect as much data as possible and sell it to advertisers, who in turn target you with ads. Rinse and repeat. A perfect example is how Meta and Google offer their extensive products for free, but the price is paid with your data, not with money. And now, with every company racing to utilize AI, your data is not just used to profit from advertising, but appears even more valuable to these companies.

This craze to develop AI and use your data is a trend that is not going away. What we can learn is that popular online companies, like Meta, Google, and Microsoft, to mention a few, will always put profits first and ethics second.

With that being said, if you choose to keep using these platforms, it’s advisable to stay aware of and up to date on any changes made to their terms of use and privacy policies. This is a hassle for those of us who don’t speak legalese, but it is necessary, especially if you are concerned about your data and privacy.

And if you are truly worried about your privacy, we’d recommend ditching services like Gmail or Facebook for good.

With growing concern over how companies deal with data and user privacy, there has been an increase in the development of amazing privacy-focused alternatives. And people are increasingly going a step further by choosing to De-Google and stop using big tech services entirely.

Luckily, in 2026, it is possible! For example, you can replace Gmail with Tuta Mail, or replace Meta’s WhatsApp with a better messenger platform like Signal.

Choose Tuta for no AI and no ads

Want to make sure that your emails are NOT used to train AI? Just switch to Tuta Mail – as an end-to-end encrypted email and calendar provider, we will not join the AI train!

Based in Germany and operating under strict data privacy laws, Tuta is open source, GDPR-compliant, and fully end-to-end encrypted.

Unlike popular email providers, Tuta Mail encrypts as much user data as possible, now with the world’s first post-quantum encryption protocol! With no links to Google, Tuta Mail has no ads, does not track its users, and is designed with privacy in mind.

Take back your privacy, and ensure your emails are not used for AI training.