Gmail, Microsoft, Meta, LinkedIn: They already scan your messages - voluntarily!

Voluntary Chat Control has already been in place since 2021 in the form of Regulation (EU) 2021/1232. Similar to scanning obligations in the UK and the USA, this regulation allows Big Tech to scan all your data. But what do services scan under this regulation, do they also scan old messages, and what does this mean for data protection and privacy? A deep dive.

And if you believe this does not matter to you because you have nothing to hide, check out this father's story: Google deleted his entire account because he had sent a naked picture of his son to their family doctor.

Now that I have your attention, find out who is scanning your emails and messages and what you can do about it!

Voluntary Chat Control: Regulation (EU) 2021/1232

For more than three years, the European Union has been discussing the regulation to detect Child Sexual Abuse Material (CSAM), dubbed Chat Control because of its immense surveillance implications. And while privacy advocates have succeeded in removing the obligation to break end-to-end encryption from the current Chat Control proposal, the EU has already put a voluntary form of Chat Control into place that's heavily used by US Big Tech services: Regulation (EU) 2021/1232.

Gmail, Facebook, Instagram, WhatsApp, Microsoft, LinkedIn, Apple – they all scan your data based on this regulation, often with a tool developed by Microsoft called PhotoDNA. Big Tech only scans newly incoming messages and emails; it does not scan old messages because these have already been scanned in the past.

The EU Commission requested data about the scans from Big Tech to prove that the scanning is proportionate, but it was unable to prove its point:

“On the proportionality of Regulation (EU) 2021/1232, the question is whether the Regulation achieves the balance sought between, on the one hand, achieving the general interest objective of effectively combating the extremely serious crimes at issue and the need to protect the fundamental rights of children (e.g. dignity, integrity, prohibition of inhuman or degrading treatment, private life, rights of the child, etc.) and, on the other hand, safeguarding the fundamental rights of the users of the services covered (e.g. privacy, personal data protection, freedom of expression, effective remedy, etc.). The available data are insufficient to provide a definitive answer to this question.”

Instead of proving that the regulation is proportionate – which is necessary because mass scanning of personal data is a severe intrusion into every citizen's private life and must not be allowed “just in case” – the EU Commission simply states that there are no indications that the scanning of messages is disproportionate, and that it is therefore fine:

“It is not possible nor would it be appropriate to apply a numerical standard when assessing proportionality in terms of the number of children rescued, given the significant negative impact on a child’s life and rights that is caused by sexual abuse. Nonetheless, in light of the above, there are no indications that the derogation is not proportionate.”

This reversal of the burden of proof has been heavily criticized by Netzpolitik as well as by Patrick Breyer, a former Member of the European Parliament and a lawyer, who calls the EU Commission's conclusion “legal nonsense”.

But let’s look at the data ourselves!

Big Tech scanning billions of data sets

Only two service providers reported concrete figures to the EU Commission on the scale of voluntary CSAM scanning: Microsoft and LinkedIn.

-

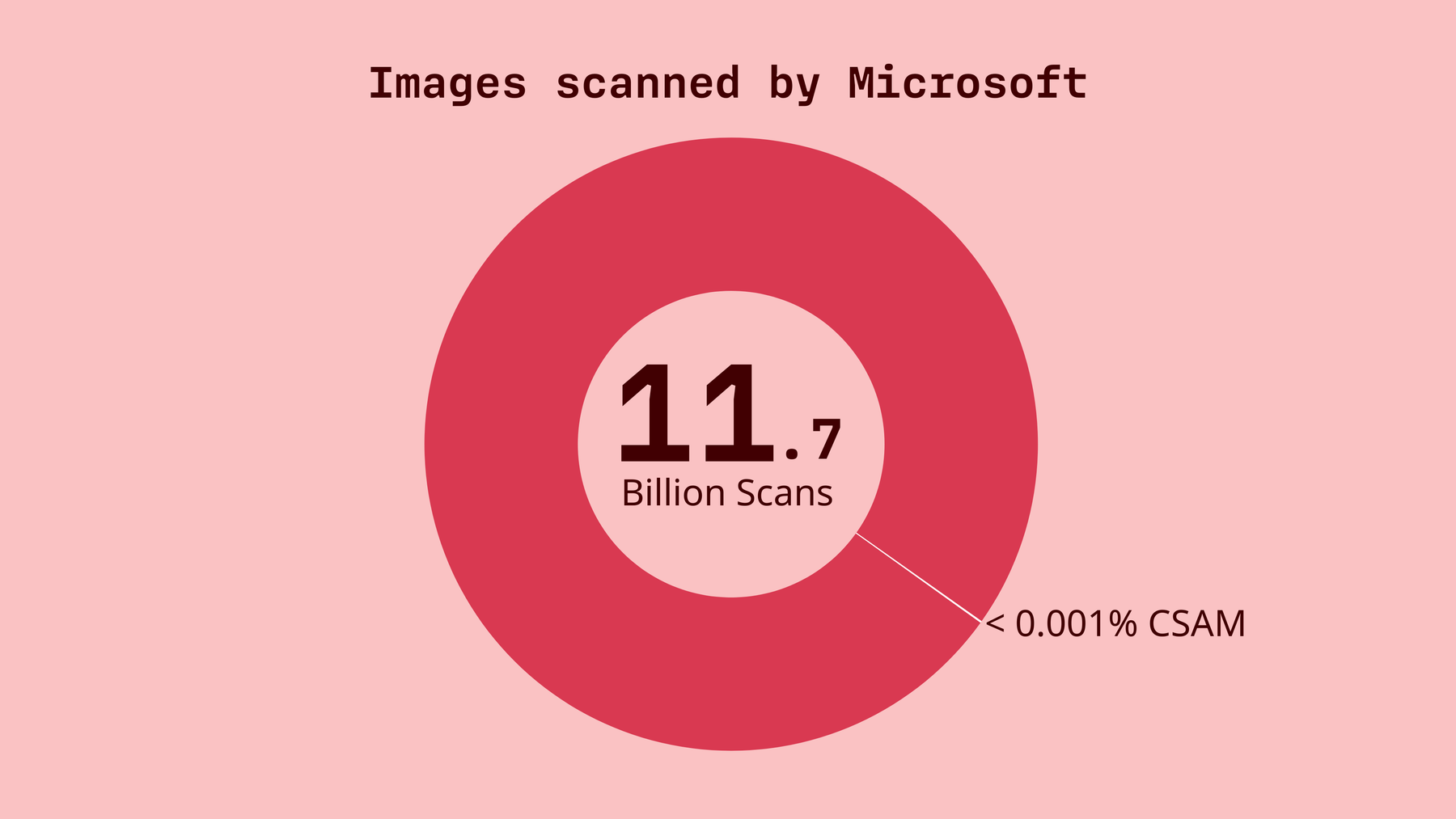

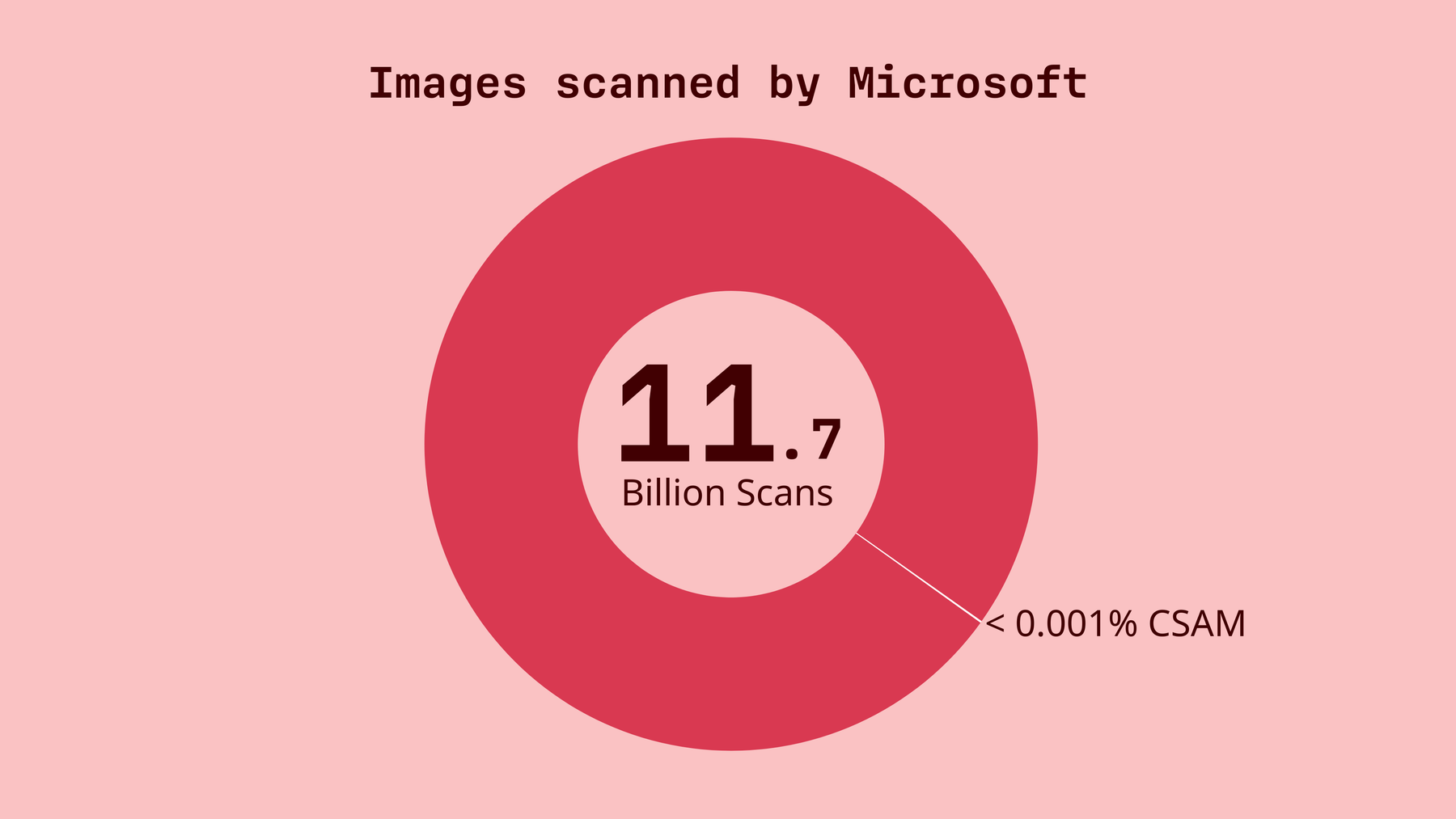

Microsoft scanned more than 11.7 billion images and videos worldwide in 2023 and just under 10 billion in 2024. They did not provide numbers for the EU only.

-

In 2023, Microsoft flagged 32,000 data sets as potential child sexual abuse material, including over 9,000 data sets in the EU. This comes down to 0.0002735 %, or 1 hit per 365,000 scans.

-

In 2024, Microsoft flagged 26,000 data sets as potential child sexual abuse material, including over 5,800 data sets in the EU. This comes down to 0.00027083 %, or 1 hit per 369,000 scans.

-

LinkedIn’svolumes were far smaller, but all scans concerned European content.

-

In 2023, over 24 million images and over 1 million videos were scanned, resulting in 2 images flagged and no videos being flagged as potential child sexual abuse material. This comes down to 0.00000833 %, , or 1 hit per 12 million scans.

-

In 2024, over 22 million images and over 2 million videos were scanned, resulting in 1 image and no videos being flagged as potential child sexual abuse material. This comes down to 0.00000417 %, or 1 hit per 24 million scans.
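The percentages above are simple ratios of flagged items to scanned items. The following minimal Python sketch reproduces that arithmetic from the rounded volumes in the reports (“more than 11.7 billion”, “over 24 million”), so the results are approximations rather than exact values. The function name `hit_rate` is our own, and Microsoft's 2024 figure is left out because only “just under 10 billion” scans were reported, not an exact number.

```python
# Hit rates derived from the figures reported to the EU Commission.
# Scan volumes are rounded in the reports, so results are approximations.

def hit_rate(flagged: int, scanned: int) -> tuple[float, float]:
    """Return (hit rate in percent, number of scans per single hit)."""
    percent = flagged / scanned * 100
    scans_per_hit = scanned / flagged
    return percent, scans_per_hit

# (service, year, flagged items, scanned items)
REPORTED = [
    ("Microsoft", 2023, 32_000, 11_700_000_000),  # worldwide images and videos
    ("LinkedIn", 2023, 2, 24_000_000),            # images only; no videos flagged
    ("LinkedIn", 2024, 1, 24_000_000),            # 22M images + 2M videos
]

for service, year, flagged, scanned in REPORTED:
    percent, per_hit = hit_rate(flagged, scanned)
    print(f"{service} {year}: {percent:.8f} % (1 hit per {per_hit:,.0f} scans)")
```

Running it prints one line per report, e.g. Microsoft 2023 at about 0.0002735 % (1 hit per 365,625 scans), matching the rounded figures above.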

In both cases, mass scanning produced only minimal results, yet the EU Commission considers voluntary Chat Control proportionate.

Google did not disclose how many items were scanned, only the outcomes: in 2023 and 2024, Google flagged 1,558 and 1,824 data sets respectively. As Google is one of the biggest online services, its scan volumes are likely comparable to Microsoft's, meaning that its hit rate also remains well below the per-mille range.
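Even without Google's scan volume, its flagged counts allow a rough bound. A hit rate of one per mille (0.1 %) means at least one hit per 1,000 scans, so Google's hits would only reach that range if it had scanned no more than 1,000 times as many items as it flagged. The Python sketch below computes that threshold and, as a purely hypothetical comparison, the hit rate at a Microsoft-like volume of 10 billion scans. That volume is our assumption, not a reported figure, and the function name is our own.

```python
# Google reported only flagged items, not how many items it scanned.
GOOGLE_FLAGGED = {2023: 1_558, 2024: 1_824}

# Hypothetical: a Microsoft-like volume of roughly 10 billion scans per year.
ASSUMED_SCANS = 10_000_000_000

def max_scans_for_per_mille(flagged: int) -> int:
    """Largest scan volume at which the hit rate is still at least 1 per mille."""
    # flagged / scanned >= 1 / 1000  <=>  scanned <= flagged * 1000
    return flagged * 1000

for year, flagged in GOOGLE_FLAGGED.items():
    threshold = max_scans_for_per_mille(flagged)
    percent = flagged / ASSUMED_SCANS * 100
    print(f"{year}: per-mille range only if <= {threshold:,} scans; "
          f"at 10 billion scans: {percent:.8f} %")
```

In other words, Google would have had to scan fewer than about 1.8 million items in 2024 for its hits to reach even one per mille.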

Meta, with services such as Facebook, Instagram, and WhatsApp, stands out sharply with much higher figures. In 2023 and 2024, the Silicon Valley tech giant flagged 3.6 million and 1.5 million images and videos respectively as potential CSAM in the EU.

A similar pattern becomes visible when looking at user reports: At Google, 297 (2023) and 216 (2024) users reported illegal material. At Meta, 254,500 (2023) and 76,900 (2024) users reported illegal material. The Commission's report gives no explanation for why these numbers differ so massively.

Looked at critically, these numbers do not at all prove that the mass scanning of everybody's messages is proportionate. And the upcoming Chat Control draft, which will soon be discussed in the trilogue, could allow even more extensive scanning: still voluntary, but with significant consequences for your privacy.

The Good and the Bad

The good thing about the current Chat Control proposal is that scanning of emails and chat messages will remain voluntary. The proposal will not force end-to-end encrypted email providers like Tuta Mail to backdoor their encryption. So at Tuta, your data will remain safe. We at Tuta are here to fight for your right to privacy; when the Chat Control debate was at its height in 2024, we even threatened to sue the EU for neglecting everybody's right to privacy, which is now no longer necessary.

However, voluntary Chat Control at its core is not just fundamentally bad, it's wrong: Without proving that the measure is proportionate for protecting children, the EU Commission sacrifices everybody's private life by opening the door to an immense intrusion into everybody's privacy by Big Tech – and Big Tech doesn't need to be asked twice.

Thus, Gmail, Microsoft, LinkedIn, Meta – they all happily scan your data. This allows them to scan your data not just for CSAM but potentially for anything else they want: to know you inside out, as a Google CEO once famously stated, and to build a profile of you for targeted advertising.

Switch to privacy

Yet, you have a choice: There are services that offer free, secure email, chat, and social media solutions, without any tracking or profiling.

Here are some great Big Tech alternatives from Europe that focus on privacy.

Say “No” to Big Tech, and their data harvesting. Say “Hi” to privacy!