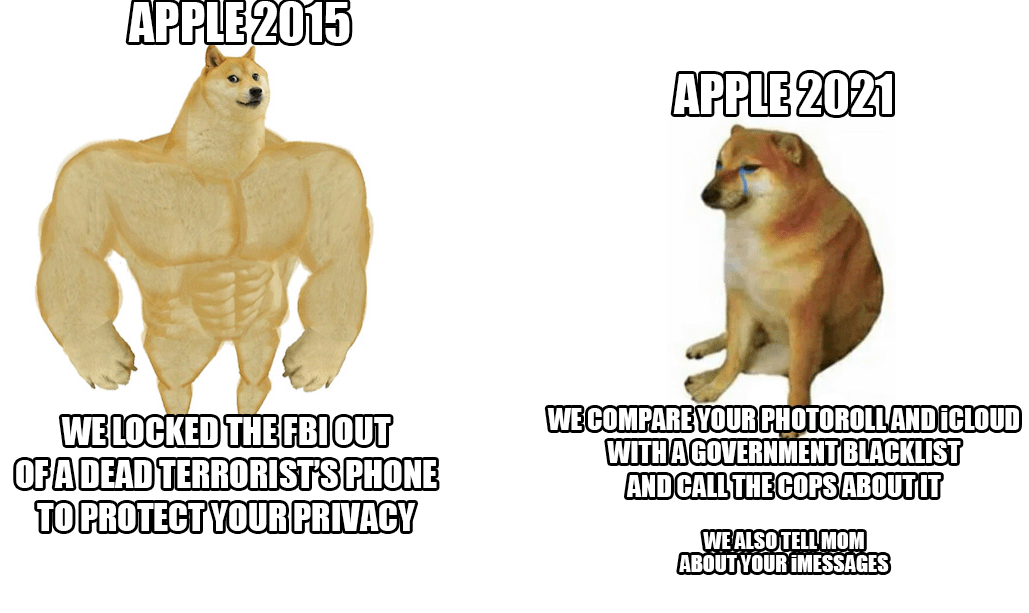

Rotten Apples: iOS 15, personal privacy, and the ongoing fight against CSAM.

“iOS 15 In touch. In the moment.”

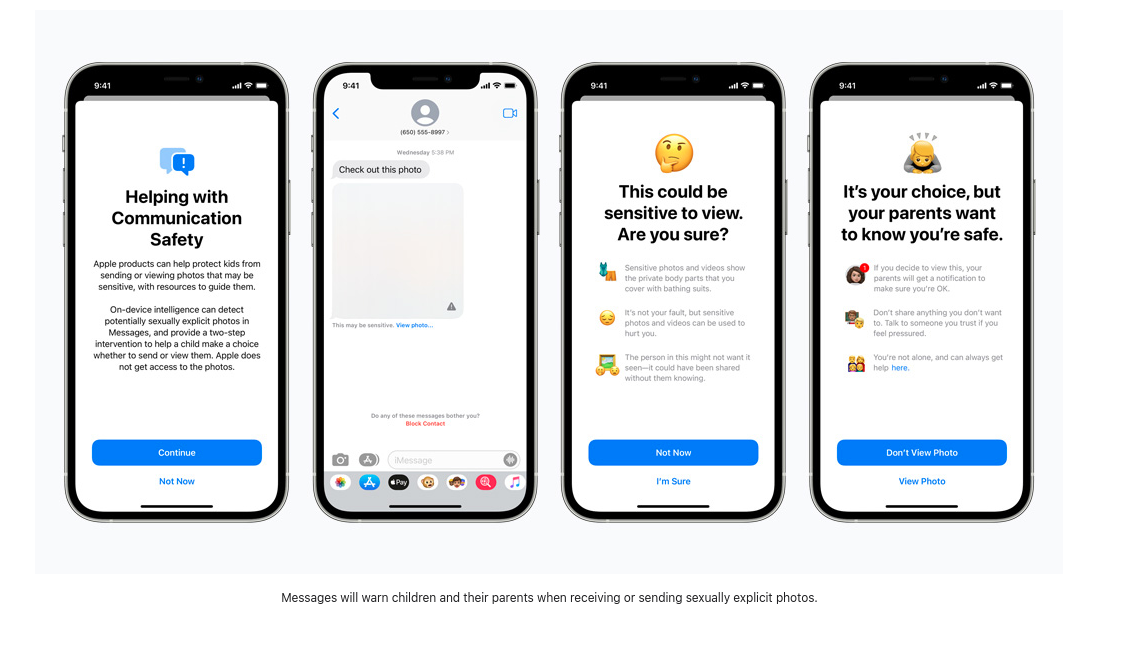

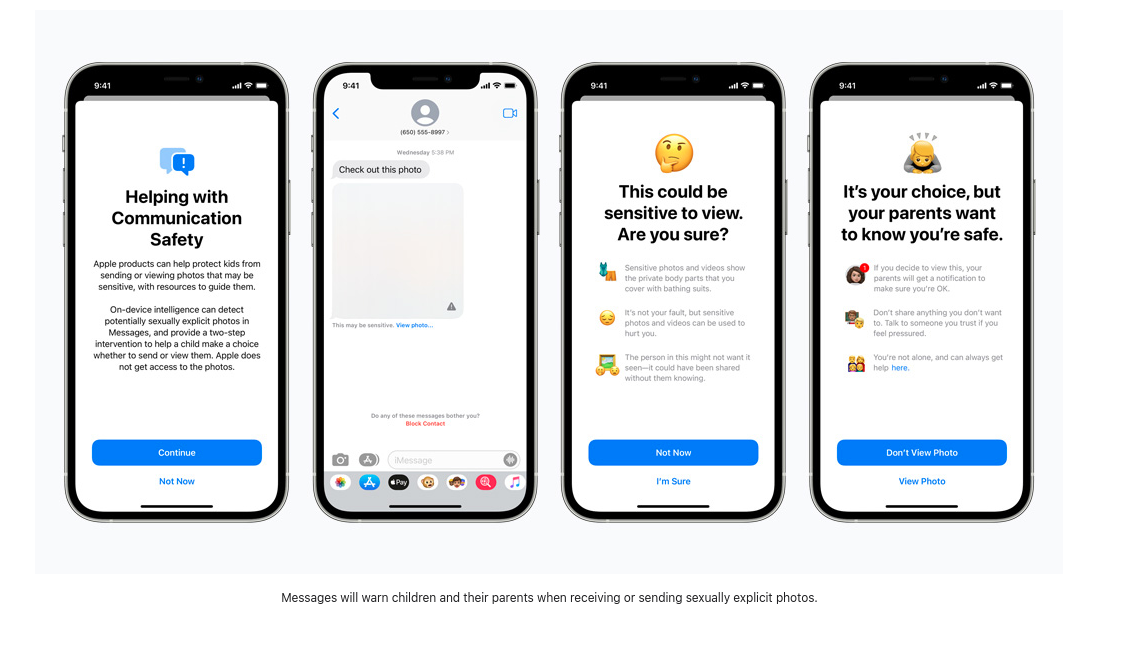

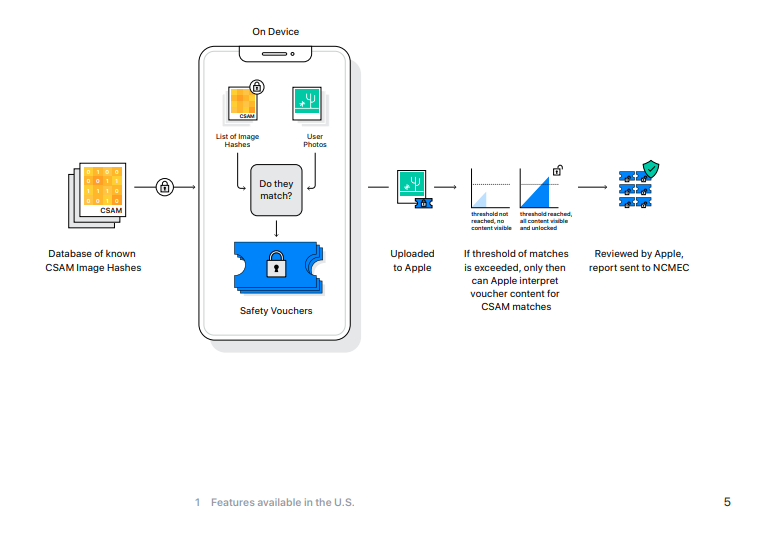

With this new operating system update we are getting three new features which, according to Apple, will “help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material (CSAM).” The first, “Communication safety in Messages”, adds new parental control tools and warnings which appear when sexually explicit material is sent or received. The second uses a CSAM detection algorithm to hash images in the Photos app and check them against the hashes of known CSAM. The third introduces search “guidance” which provides users with resources to report CSAM and actively intervenes if users search for a term related to CSAM.

Let’s take a moment and unpack the details of what these features aim to do and how they do it.

No more unsolicited d*ck pics.

A recent study from Thorn reports that “Self-generated child sexual abuse material (SG-CSAM) is a rapidly growing area of child sexual abuse material (CSAM) in circulation online and being consumed by communities of abusers.” Apple’s new “Expanded Protections for Children” aims to slow the growth of this practice. “Nudes” shared between romantic partners often spread throughout a peer group and can end up online in cases of “revenge porn”. Apple will now begin presenting children (or users with these safety features enabled) with warnings about viewing or sending explicit images. If an image is viewed or sent, the parent’s device will receive a notification about the activity.

Incoming images are scanned by an on-device machine learning program which flags suspected material and “learns” to determine if future images fall into the same category. Who controls this algorithm, and what influence users have over how it reports suspected images, remains undisclosed. Apple writes that this “feature is designed so that Apple does not get access to the messages.” So with this new update, Apple will begin screening encrypted messages sent via iMessage in order to warn parents and teens about harmful online behavior.

On the surface this may not appear dangerous, but this new on-device monitoring software could easily be altered and abused: the same scanning technology could be employed to scan encrypted messages for dissenting political opinions, minority religious languages, or discussions of sexual orientation.

Scanning to Stop the Spread.

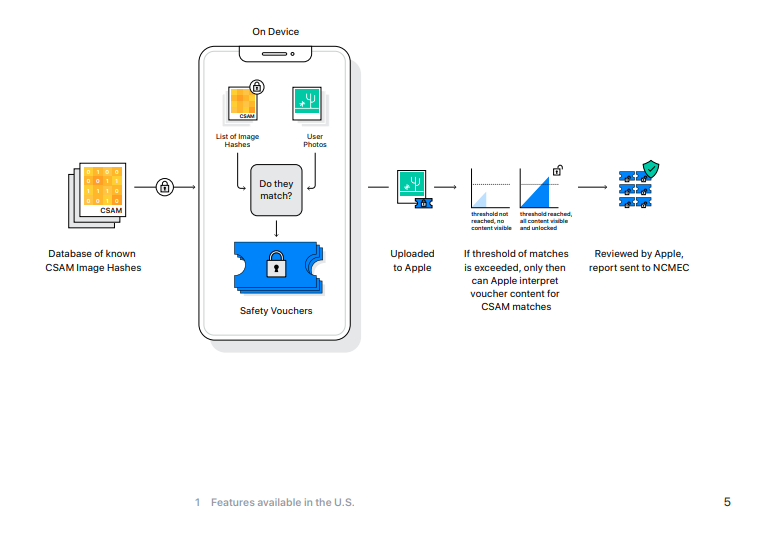

According to the Electronic Frontier Foundation, “although Apple holds the keys to view Photos stored in iCloud Photos, it does not scan these images.” This will change with iOS 15: if you are currently backing up your photos in Apple’s iCloud, these photos will be hashed and those values will be compared to those of known CSAM.

If an iOS device has the iCloud backup feature enabled, this scanning will first begin on the device itself. The new iOS 15 includes a version of the National Center for Missing and Exploited Children’s (NCMEC) database of hashed CSAM images, and non-encrypted images taken on an iPhone will have their hashes (a *hopefully* unique numeric value representing the image) compared to those in the NCMEC database. In the event that a match occurs, the photos are sent to human reviewers at Apple. If these reviewers confirm the match, the image is sent to NCMEC and law enforcement, and the user’s account is deactivated.
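To make the matching step concrete, here is a minimal sketch in Python of comparing image hashes against a set of known hashes. It is an illustration under stated assumptions, not Apple’s implementation: the function names, file names, and placeholder byte strings are all hypothetical, and SHA-256 is used purely as a stand-in. A cryptographic hash like SHA-256 only matches byte-identical files, so a real system would need a perceptual hash that tolerates resizing and recompression.

```python
import hashlib
from typing import Dict, List, Set


def image_hash(data: bytes) -> str:
    """Return a hex digest for the raw image bytes.

    SHA-256 is an assumption made for illustration; it only matches
    files that are identical byte for byte.
    """
    return hashlib.sha256(data).hexdigest()


def find_matches(photos: Dict[str, bytes], known_hashes: Set[str]) -> List[str]:
    """Return the names of photos whose hash appears in the known-hash set."""
    return sorted(
        name for name, data in photos.items()
        if image_hash(data) in known_hashes
    )


# Hypothetical stand-in for the on-device hash database.
known_hashes = {image_hash(b"placeholder-flagged-image")}

# Hypothetical photo library: file name -> raw bytes.
photos = {
    "IMG_0001.jpg": b"holiday-photo-bytes",
    "IMG_0002.jpg": b"placeholder-flagged-image",
    "IMG_0003.jpg": b"placeholder-flagged-image ",  # one extra byte
}

matches = find_matches(photos, known_hashes)
print(matches)  # ['IMG_0002.jpg']
```

Note that `IMG_0003.jpg` differs from the flagged file by a single byte and is not matched. That is why exact hashing is too brittle for this job, and also why the looser matching a real system needs makes the “hopefully unique” caveat matter: the more tolerant the hash, the greater the chance that an unrelated image produces a match.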

If you are using iCloud to back up your photos, your photos will be scanned against this database, without probable cause or suspicion that you may have committed or been involved in any crime.

[Don’t] Look at this Photograph.

The final new children’s safety feature is an update to Siri and Search that will offer guidance on how to report cases of CSAM as well as “intervene” if users are searching for terms related to CSAM.

The exact wordlist is not public, and we do not know if user-reported terms will be used to train the machine-learning search monitor.

There is very little information available as to what kind of terms will trigger these warnings and what happens when they are ignored. In the case of image hash review, an Apple employee will confirm matching results, but we do not know what further measures are taken with flagged searches. On the official Apple press page for these new features, the discussion of monitored search terms consists of just four brief sentences. It will also be interesting to see whether this list grows based upon user-reported terms and how Apple chooses to train this algorithm.

If you have nothing to hide…

Between these three new features, Apple users, and those who exchange messages and pictures with them, are being subjected to the scanning of their encrypted messages and the monitoring of their private search terms, all without any suspicion or evidence of a crime. It is one thing for Apple and other Big Tech companies to scan the hashes of images stored on their servers. After all, these servers are company property and you don’t have to use them. But pushing these new surveillance features onto local devices ignores the fact that you, the device owner, have paid hundreds of dollars (or Euros) for a device which is monitoring your behavior. You don’t have much choice in what processes are running on a piece of your personal property. This ‘improvement’ strays even further towards the product-as-service model which has already become mainstream in the world of proprietary software.

With this new version of iOS, Apple is undermining the security of its once-lauded messaging platform. An end-to-end encrypted messaging app which scans images once they reach the destination device is a dangerous lie that does nothing to protect the security and privacy of Apple users. Putting this capability within reach of nation states which don’t respect human rights is a recipe for disaster.

iOS 15 sets a dangerous standard for the future of end-to-end encryption.

Protecting the children will be the mantra that leads us into a world of increased surveillance and the dissolution of personal privacy. Yes, CSAM is abhorrent and should be prosecuted to the full extent of the law, but the existence of CSAM does not warrant the abandonment of a nuanced discussion surrounding privacy, data encryption, sexual violence, and surveillance.

Matthew Green warns of the aftermath of these changes, writing, “Regardless of what Apple’s long term plans are, they’ve sent a very clear signal. In their (very influential) opinion, it is safe to build systems that scan users’ phones for prohibited content. That’s the message they’re sending governments, competing services, China, you.”