CSAM Scanning: EU Commission's lies uncovered.

We have analyzed telecommunication surveillance orders. Data shows that the EU's CSAM scanning is completely disproportionate and must be stopped.

In its draft law to combat child sexual abuse, the EU Commission describes one of the most sophisticated mass surveillance apparatuses ever deployed outside China: CSAM scanning on everybody’s devices.

As an email service we regularly receive monitoring orders from German authorities. We have analyzed this data to find out whether monitoring orders are issued to prosecute child molesters.

EU CSAM scanning

The EU Commission’s draft regulation on preventing and combating child abuse is a frontal attack on civil rights. And the EU Commission is using massive exaggerations to push this draft into law.

As citizens, we can expect more from the EU Commission. When the Commission wants to introduce surveillance mechanisms that will severely weaken Europe’s cybersecurity, the least we can ask for is honest communication.

No one denies that child sexual abuse is a big issue that needs to be addressed. But when proposing measures as drastic as CSAM scanning of every private chat message, the arguments must be sound. Otherwise, the EU Commission is not helping anyone: not the children, and not our free, democratic societies.

The EU Commission has already managed to push three arguments into the public debate to swing public opinion in favor of scanning for CSA material on every device. But these arguments are blatantly wrong:

- One in Five: The EU Commission claims that one in five children in the EU is sexually abused.

- AI-based surveillance would not harm our right to privacy but would save the children.

- 90% of CSAM is hosted on European servers.

The EU Commission uses the ‘One in Five’ claim to justify the proposed general mass surveillance of all European citizens.

Yes, child abuse is an immense problem. Every expert in the field of child protection will agree that policymakers need to do more to protect the most vulnerable in our society: children.

Nevertheless, the question of proportionality must be examined very closely when it comes to CSAM scanning on our personal devices: Is it acceptable for the EU to introduce mass surveillance mechanisms for all EU citizens in an attempt to tackle child sexual abuse?

The German association “Digitale Gesellschaft” has published a video that also aims to answer the question of proportionality; it is worth watching!

To answer the question of proportionality, we need to set aside the propaganda of the EU Commission and instead ask several questions:

1. One in Five

Where does the number ‘One in Five’ come from?

We could not find any statistic that supports the ‘One in Five’ claim. The figure is displayed prominently on a Council of Europe website, but without any source.

According to the World Health Organization (WHO), 9.6% of children worldwide are sexually abused. Unlike the EU figure, this number is based on a study: an analysis of community surveys.

Still, let’s set aside the European Commission’s exaggerated number of affected children, because the figure published by the WHO is also very high and must be addressed.

The number published by the WHO suggests that more than 6 million children in the EU suffer from sexual abuse.

Consequently, we can agree that the EU must do something to stop child sexual abuse.

2. Can surveillance help tackle child abuse?

Where does the abuse take place?

Another crucial question when introducing surveillance measures to tackle child sexual abuse is whether they are effective.

If monitoring our private communication (CSAM scanning) helped save millions of children in Europe from sexual abuse, many people would agree to the measure. But would that actually be the case?

The same Council of Europe website that claims ‘1 in 5’ children are affected also states: “Between 70% and 85% of children know their abuser. The vast majority of children are victims of people they trust.”

This raises the question: How is scanning every chat message for CSAM going to help prevent child sexual abuse within the family, the sports club or the church?

The EU Commission leaves this question unanswered.

How many monitoring orders are about protecting children?

To find out whether monitoring of private messages for CSA material may help tackle child sexual abuse, we must take a look at actual monitoring data that is already available.

As an email provider based in Germany we have such data. Our transparency report shows that we are regularly receiving telecommunications surveillance orders from German authorities to prosecute potential criminals.

One might think that Tutanota, as a privacy-focused, end-to-end encrypted email service, would be the go-to place for criminal offenders, for instance for sharing CSAM.

Consequently, one would expect a high number of court orders issued in relation to “child pornography”.

In 2021 we received ONE telecommunications surveillance order based on the suspicion that the account was being used in connection with “child pornography”.

This is 1.3% of all orders that we received in 2021. More than two thirds of the orders were issued in relation to “ransomware”; a few individual orders concerned copyright infringement, preparation of serious crimes, blackmail and terrorism.

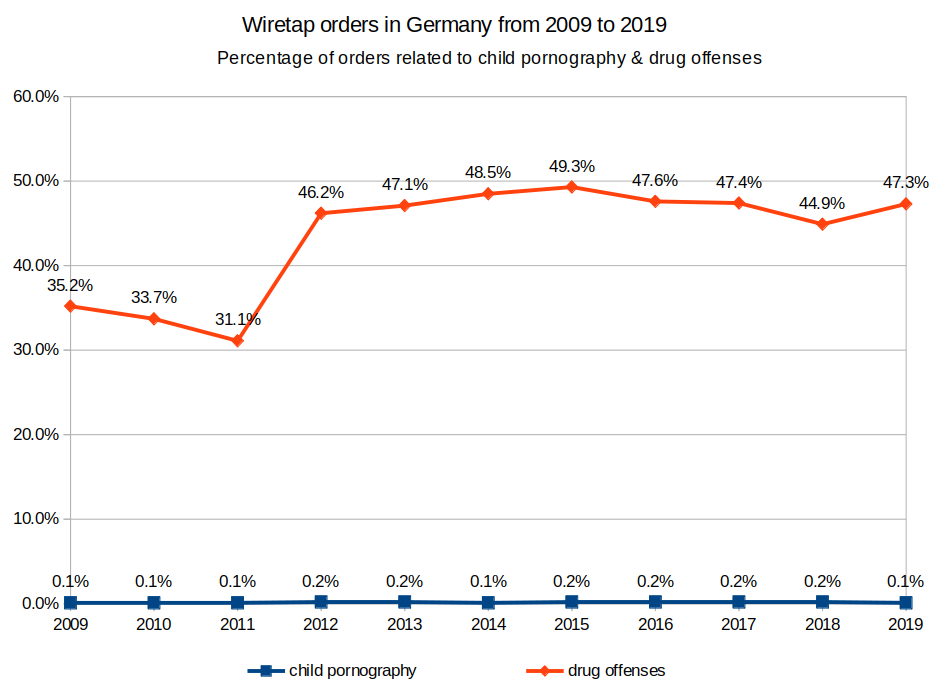

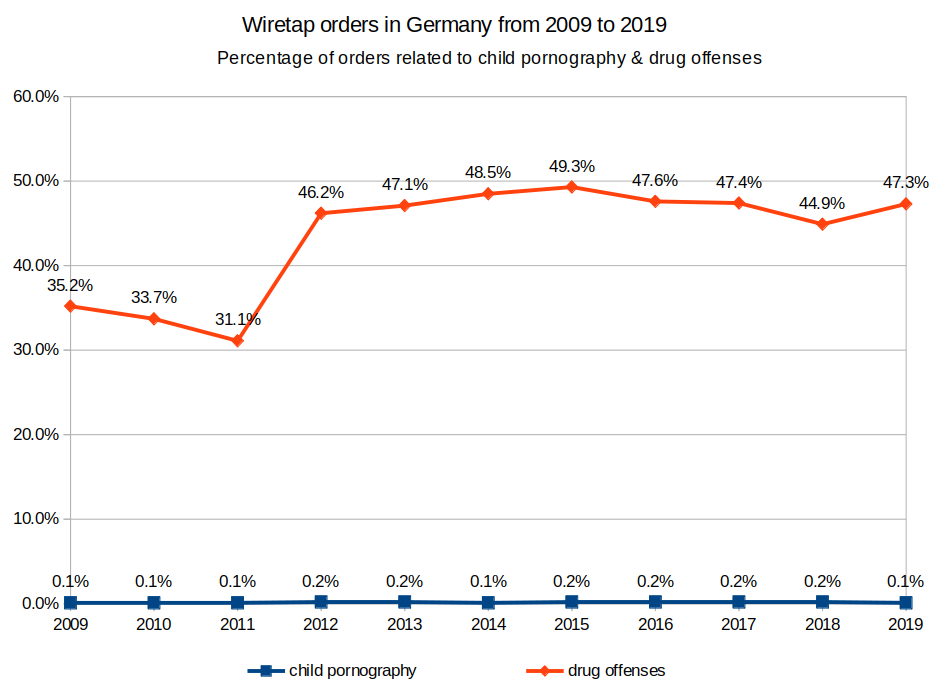

Numbers published by the German Federal Office of Justice paint a similar picture: In Germany, 47.3 per cent of the measures for the surveillance of telecommunications according to § 100a StPO were ordered to find suspects of drug-related offenses in 2019. Only 0.1 per cent of the orders, 21(!) in total, were issued in relation to “child pornography”.

According to the statistics of the German Federal Ministry of the Interior, 13,670 cases of child abuse were recorded in Germany in 2019.

Taken together: 13,670 cases of child abuse were recorded in Germany in 2019, yet only 21 telecommunications surveillance orders were issued in relation to “child pornography” that year.
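The comparison above boils down to a single ratio. Here is a minimal Python sketch using only the two 2019 figures quoted in this post (13,670 recorded child abuse cases and 21 surveillance orders related to “child pornography”). Note that it divides two separately collected statistics; it does not show which individual cases, if any, an order was linked to.

```python
# Figures for Germany, 2019, as quoted in this post.
recorded_abuse_cases = 13_670   # child abuse cases (German Federal Ministry of the Interior)
csam_surveillance_orders = 21   # § 100a StPO orders related to "child pornography"

# Orders relative to recorded cases: two separate statistics, not linked case by case.
share = csam_surveillance_orders / recorded_abuse_cases
cases_per_order = recorded_abuse_cases / csam_surveillance_orders

print(f"Share: {share:.2%}")                       # Share: 0.15%
print(f"Cases per order: {cases_per_order:.0f}")   # Cases per order: 651
```

In other words, there was roughly one such order for every 651 recorded cases of child abuse.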

It is evident that the monitoring of telecommunications (which is already possible) plays no significant role in tracking down perpetrators.

The conclusion is clear: ‘More surveillance’ will not bring ‘more security’ to the children in Europe.

3. Europe - a hub for CSAM?

As with the ‘One in Five’ claim, the EU Commission asserts that 90% of child sexual abuse material is hosted on European servers. Again, the EU Commission uses this claim to justify its planned CSAM scanning.

However, even experts in this field disagree: the German eco Association, which works with the authorities to take down CSAM (Child Sexual Abuse Material), states that “in their estimation, the numbers are a long way from the claimed 90 percent”. Alexandra Koch-Skiba of the eco Association also says: “In our view, the draft has the potential to create a free pass for government surveillance. This is ineffective and illegal. Sustainable protection of children and young people would instead require more staff for investigations and comprehensive prosecution.”

Even German law enforcement officials are criticizing the EU plans behind closed doors. They argue that there are other ways to track down more offenders. “If it’s just about having more cases and catching more perpetrators, then you don’t need such an encroachment on fundamental rights,” says one longtime child abuse investigator.

Exaggeration on the verge of fake news

It is unbelievable that the EU Commission uses these exaggerations to swing public opinion in favor of CSAM scanning. It looks as if the argument ‘to protect the children’ is being used to introduce China-style surveillance mechanisms. Here in Europe.

But Europe is not China.

What can I do?

In Germany there is at least a spark of hope. A leaked ‘chat control’ document suggests that the current German government will oppose the EU Commission’s proposal.

Now we, as citizens of Europe and members of the civil society, must put pressure on legislators to oppose legislation that will put every email and every chat message that we send under constant surveillance.

And you can do a lot to help us fight for privacy:

- Share this blog post so that everybody understands the false claims made by the EU Commission.

- Share the video of ‘Digitale Gesellschaft’ so everybody understands that this piece of legislation is completely disproportionate and that there are much better ways to protect the children.

- Call/email your EU representative to make your voice heard: Stop CSAM scanning on my personal device!

What does CSAM mean?

CSAM stands for Child Sexual Abuse Material: images that depict the sexual abuse and exploitation of children.

Who scans for CSAM?

Several services, for instance Google, scan for CSA material on their servers. Since July 6th, 2021, companies in the EU have also been allowed to scan for CSA material voluntarily.

What are the consequences of CSAM scanning?

Client-side scanning for CSA material will turn your own devices into surveillance machines. If CSAM scanning becomes mandatory, every chat message and every email you send will be scanned and read by others. Client-side scanning thus undermines your right to privacy.